In this work, we introduce an intelligent agent that helps humans learn a new subject by scheduling the introduction of new concepts from that subject to the learner. We explore the application of this agent to the task of language learning, where we aim to help a learner improve their command of a new language. The agent keeps track of the current knowledge and capability of the learner and selects exercises for the user based on this knowledge state. Our work aims to provide a method to deduce the knowledge state of a user from the user's task history, such as past mistakes and results from tests.

Our method combines a knowledge tracing model with a reinforcement learning agent to select the best possible next exercise for the user. The tested model significantly outperforms a random policy, which was selected as our baseline comparison. These successful results demonstrate that reinforcement learning is a viable technique for online learning modules. We have made all code and pre-trained models from this research public to encourage further research and development in this domain.

One of the best ways to learn any subject or topic is to be incrementally exposed to different concepts of the subject over time. This iterative learning needs to be done in such a way that the concept introduced at every step is of a slightly higher complexity as compared to the concepts that the learner already knows. This means that the learning process can be enhanced for a learner if the concepts that are introduced in each step are scheduled according to the current knowledge state of the learner. The same is true if a learner is trying to learn a new language. One of the most effective ways to learn a new language is through immersion, but not everyone has access to language immersion programs. We plan to tackle this by developing an intelligent agent that can carry out personalized conversations with the learner.

This project would have two major components:

At every step, in order to personalize the conversations, the agent will keep track of the word-level mistakes that the learner makes and use this data to generate a representation of the knowledge state of the user (conversely, using the representation of the knowledge state we can predict the probability of a user making a mistake on a particular word in a sentence). This knowledge representation is used by the agent to generate relevant conversation.

The conversational agent is responsible for generating new conversations with the learner based on the current knowledge state of the user. We explore the applications of Sequence to Sequence models to generate the next dialogue for the conversation (where the previous reply from the user and the knowledge state of the user can be fed as the input to the model). We train the agent using Reinforcement learning algorithms (Q-learning) to find the best action to select a conversation that can help in improving the knowledge of the user. The reward for the reinforcement learning algorithm is based on the improvement in the knowledge state of the user after having a conversation with the agent.

Our contributions are summarized as follows:

We propose a novel architecture based on Reinforcement learning and Deep knowledge tracing that can help humans in language learning.

We explore previously unexplored architectures, especially ones based on attention, for Deep knowledge tracing in the task of second language acquisition. In particular, we focus on architectures that can be easily integrated with our RL agent.

We train and evaluate a Q-learning based RL agent to intelligently schedule conversations that outperforms the baseline in terms of both cumulative reward and sample efficiency.

This approach uses knowledge tracing to understand what the user has learned based on their conversations with the bot. Prior works on Recurrent Neural Network (RNN) based Deep knowledge tracing (DKT) Piech et al. (2015), Transformer based DKT Pandey and Karypis (2019), and GNN based DKT Nakagawa et al. (2019); Song et al. (2022) show that deep knowledge tracing can be used effectively to model the current knowledge state of a student purely based on the performance on previous questions and the time taken to solve them. Given a new set of questions, the DKT models predict whether the student will be able to solve each question. Though these works are not focused on language learning, the approach can be applied to language learning using the dataset available from Duolingo.

Further, a Knowledge Graph based approach incorporating autoencoders to compute recommendations has been developed by Bellini et al. (2018). The authors make use of a Knowledge Graph (KG) and build an autoencoder to estimate this graph, which in turn tries to learn the user's learning patterns. Although this work is not related to education, the approach ties in closely with what we are planning to achieve.

To improve performance on knowledge graph completion tasks, Zhang et al. (2020) introduced a new model architecture for relational graph neural networks. Their model, Relational Graph neural network with Hierarchical ATtention (RGHAT), leverages information from local relationships in the knowledge graph. The use of attention to maintain neighborhood information allows the model to notably outperform previous state of the art models in knowledge graph completion tasks.

An additional method for improved knowledge tracing was implemented by Osika et al. (2018), which involves an ensemble of multiple independent knowledge tracing models. This method has been shown to provide knowledge tracing with greater accuracy than any single knowledge tracing model has been able to achieve. One of the individual models employed by Osika et al. (2018) is a gradient boosted decision tree using the LightGBM framework, which was developed by Ke et al. (2017). The LightGBM framework allows for training a decision tree using only a fraction of the dataset at a time. This makes it an ideal framework to train a decision tree on a large, sparse data set such as ours.

Bayesian knowledge tracing (BKT) Corbett and Anderson (2005) and Individualized BKT (I-BKT) Yudelson et al. (2013) models can also be used to simulate the learner's understanding of a subject. Though DKTs outperform BKTs in knowledge tracing, BKTs can often be used when the number of samples is not sufficient to train a deep learning model. Bassen et al. (2020) use BKT models to simulate a student's understanding of the subject based on minimal test scores and samples. This is very useful when there is a human in the loop, where data is provided online and in real time by the student using the learning app.

As a motivation for this research we use the work done by Ruan et al. (2021), who show that leveraging interactive chatbots can help improve the language learning experience of students. The authors worked with 56 Chinese students who tried to learn English as a second language and showed that their performance improved more when using an interactive chatbot (as compared to traditional listen and repeat methods) when evaluated by the IELTS grading standard for voluntary learning.

Recent work by Bassen et al. (2020) shows that reinforcement learning can be used to effectively schedule the learning activities of a person. The RL agent is trained to suggest educational activities that maximise the total gain in knowledge and minimise the total time spent learning the subject. The policy is optimized using sample-efficient proximal policy optimization (PPO), which is essential when the number of samples available from users is low.

Sample-efficient policy optimization is essential in our case, where the number of samples from the users would be significantly low. Model-based reinforcement learning has shown promising results for sample efficiency without much compromise on performance Wang et al. (2019). Among policy optimization methods, Proximal Policy Optimization (PPO) Schulman et al. (2017b), Trust Region Policy Optimization (TRPO) Schulman et al. (2017a), Deep Deterministic Policy Gradients (DDPG) Lillicrap et al. (2019), and Twin Delayed DDPG Fujimoto et al. (2018) have shown much better sample efficiency compared to basic policy gradient optimization.

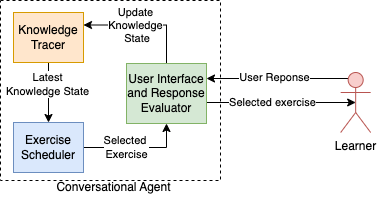

The overall vision that we have in mind for this project is to create an interactive agent that can converse with the users (either by asking questions or just generally discussing a topic) in a target language (which the users want to learn). The conversations that the agent generates should be tailored to the skill level of each user. In order to do so, the agent needs the following two components:

A knowledge tracing module that will keep track of the user replies and build a representation of the current skill level of the user

A conversation generator that will select the next sentence/unit of conversation on the basis of the current skill level of the user.

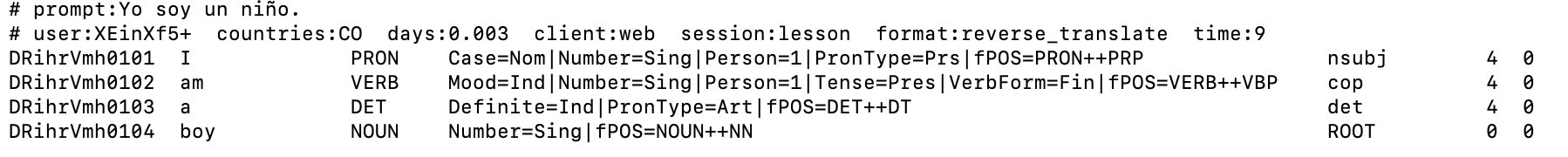

For the specific task of knowledge tracing and conversation generation targeted to language learning, we make use of the dataset for Second Language Acquisition modeling that was released by Duolingo Settles et al. (2018). For every user, the dataset consists of the history of interactions of the user (in terms of the exercises that have been completed):

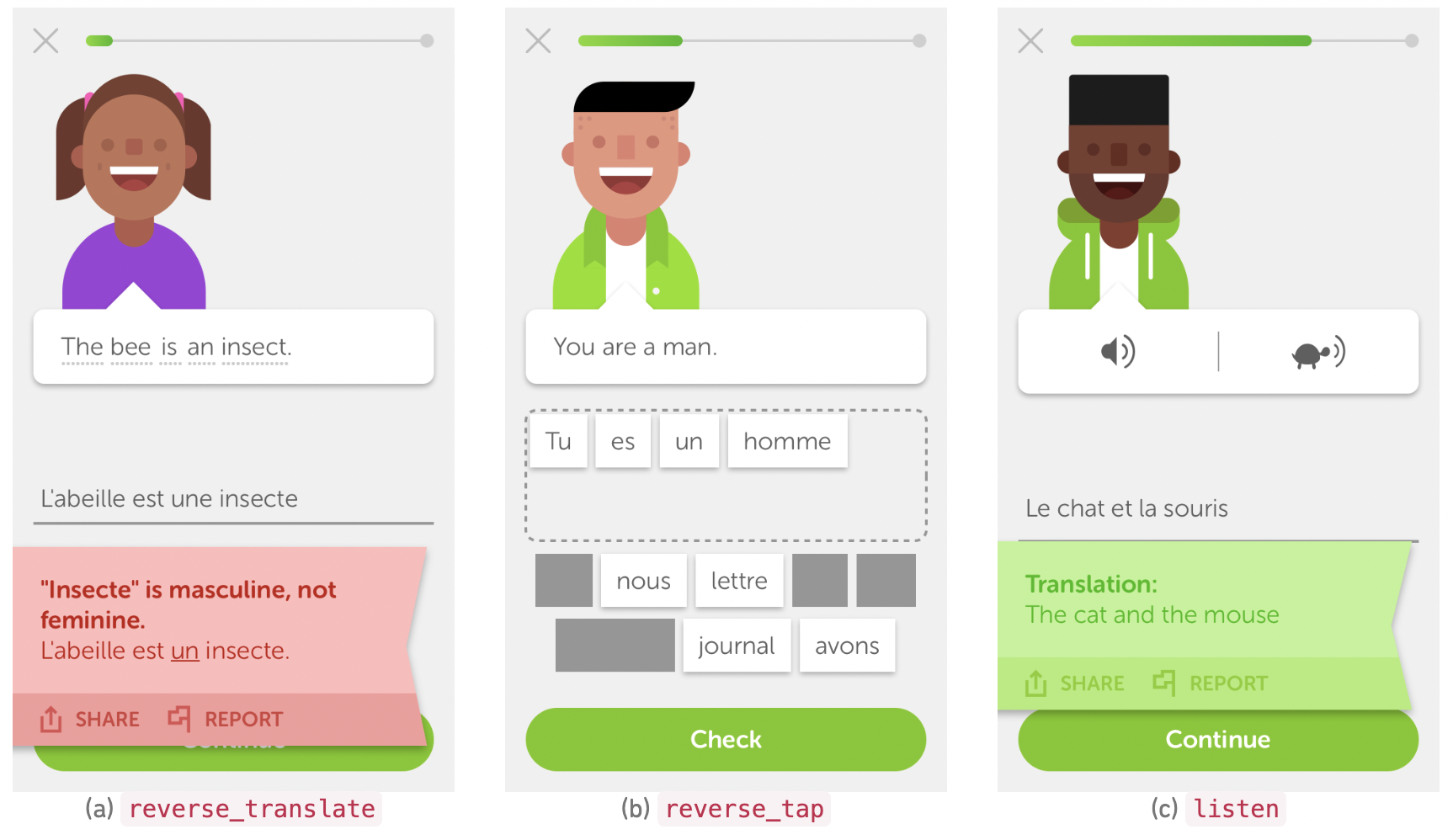

Each exercise can be in one of the following formats:

reverse_translate: Given a sentence in the known language, translate it to the new language (being learned).

reverse_tap: Given a sentence in the known language, translate it to the new language (being learned) by selecting options from a given set of tags.

listen: Listen to a sentence in the language being learned and then transcribe it.

For each exercise, we are given the following:

The written prompt that was provided to the user in the known language (if the format was reverse_translate or reverse_tap).

The correct answer for the exercise (closest to the answer that was provided by the user). Due to the existence of synonyms, homophones, and ambiguities in number, tense, formality, etc., each exercise on Duolingo may have thousands of correct answers. Therefore, to match the student's response to the most appropriate correct answer from the extensive set of acceptable answers, Duolingo utilizes finite-state transducers (FSTs).

Labels for each token in the correct answer to indicate if the user got that token right or not

Morphology labels for each token in the closest correct answer

Time taken by the user to complete the exercise.

Time since the user started learning that language

We will be using this dataset to train our knowledge tracing model as well as create the initial version of the conversation generator.

The expectation of the knowledge tracing model is as follows:

Given the history of the user, i.e. the set of exercises that have been completed by the user (along with the labels indicating whether or not the user got a particular token in the exercise correct), the model should be able to predict the performance of the user on a new exercise.

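If the set of exercises that have been completed by the user along with the labels for each token in each exercise is given by $E = \{(e_1, l_1), \dots, (e_n, l_n)\}$ and the new exercise that we are going to predict on is given by $e_{n+1}$, with tokens $w_1, \dots, w_m$, then the goal of the knowledge module is to predict the following:

$$P(l_{n+1,j} = 1 \mid E, e_{n+1}), \quad j = 1, \dots, m$$

where $P(l_{n+1,j} = 1 \mid E, e_{n+1})$ refers to the probability that the user will get the token $w_j$ incorrect.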

In order to train our knowledge model, we make use of the tokens in the closest matching correct answer that has been provided in the dataset for each exercise. This is because the tokens corresponding to the correct answer represent the concepts that we want the user to learn (i.e. the concepts from the new language). We make use of the GloVe embeddings of these tokens in order to capture the meaning of each token.

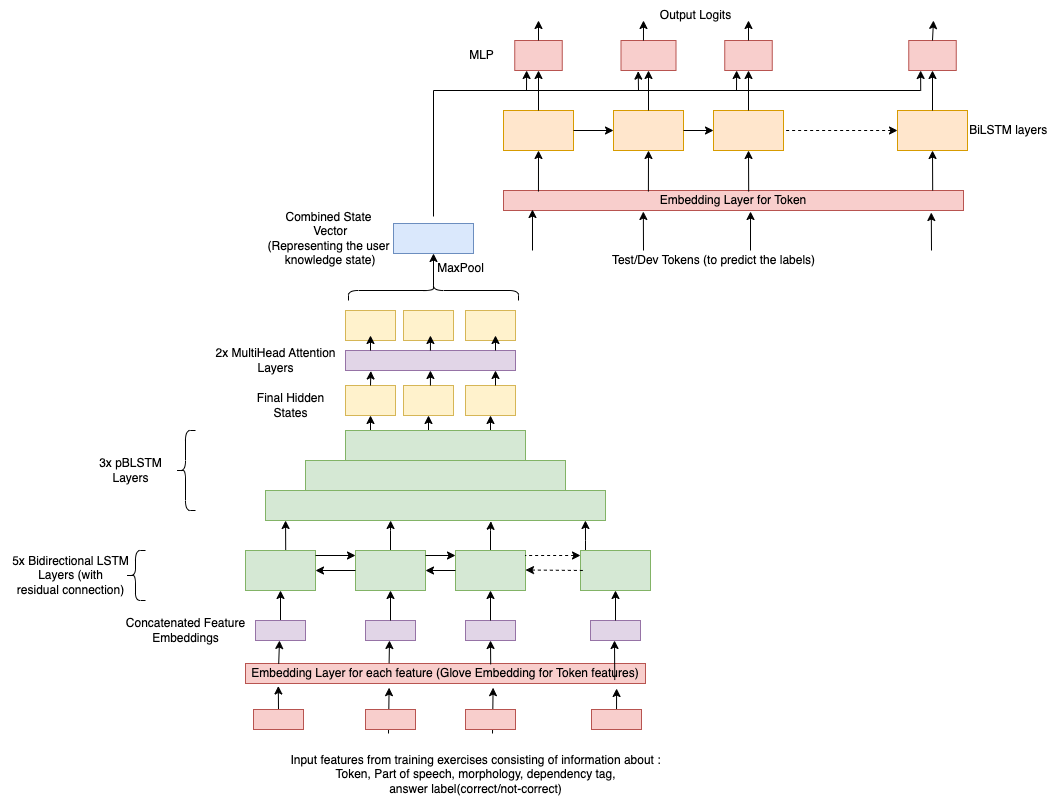

We propose to make use of an encoder-decoder architecture to develop our knowledge model. The following figure provides a high level overview of the architecture of the knowledge model:

In our knowledge model, the encoder is responsible for creating a hidden representation of the knowledge state of the user and the decoder will be responsible for making use of that knowledge state and predicting the performance of the user on a new unknown set of exercises.

The overall architecture of the knowledge model can be summarized as follows:

The encoder consists of 5 layers of Bidirectional LSTM, followed by 3 pBLSTM layers to create a condensed representation of the input (user history).

We make use of 2 multi head attention layers on the outputs of the pBLSTM to take any long range dependencies between the inputs into consideration.

The output from the multi head attention layers is condensed into a single vector by making use of max-pooling. This single vector is a representation of the knowledge state of the user.

The decoder, again, consists of 5 layers of Bidirectional LSTM to create a hidden representation of the test exercises (on which we want to predict the responses of the user). The decoder will create a single hidden representation for each token in the test exercises.

Finally, the decoder has a 3 layered Feed Forward network that takes in the hidden state (of the test token) along with the knowledge state of the user and then predicts the probability that the user will get that particular token incorrect.
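To make the effect of the pyramidal (pBLSTM) layers concrete, the following is a minimal sketch of only their time-reduction step, assuming the usual pyramidal scheme in which each layer concatenates pairs of adjacent time steps. The recurrent cells themselves are omitted, the function names are hypothetical, and dropping a trailing unpaired step is an assumption rather than a detail taken from our implementation.

```python
from typing import List

Vector = List[float]


def pyramid_reduce(sequence: List[Vector]) -> List[Vector]:
    """Concatenate each pair of adjacent time steps, halving the sequence length.

    Assumption: a trailing unpaired step is dropped when the length is odd.
    """
    usable = len(sequence) - (len(sequence) % 2)
    return [sequence[i] + sequence[i + 1] for i in range(0, usable, 2)]


def stack_pyramid(sequence: List[Vector], num_layers: int = 3) -> List[Vector]:
    """Apply the reduction once per pyramidal layer (recurrent cells omitted)."""
    for _ in range(num_layers):
        sequence = pyramid_reduce(sequence)
    return sequence


# 16 time steps of 2 features each; three layers leave 16 / 2**3 = 2 steps.
history = [[float(t), float(-t)] for t in range(16)]
condensed = stack_pyramid(history, num_layers=3)
```

In a full pBLSTM each concatenated pair would be passed through a bidirectional LSTM that maps it back to the hidden size; here the feature dimension simply grows, which is enough to show why three such layers shorten the user history by a factor of eight.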

Please refer to section 4 for a detailed explanation of the training of the knowledge module.

The goal of the conversational agent is to generate the next exercise that should be provided to the user such that the expected knowledge gain of the user is maximized. We followed an iterative approach to develop a Reinforcement Learning based agent that will generate the next exercise.

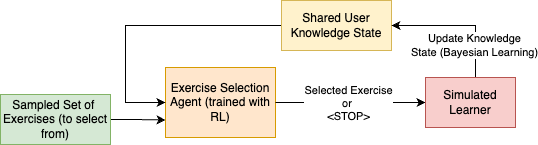

As the first step towards developing an interactive agent, we first developed a simple Reinforcement Learning based scheduling algorithm. The Agent in this case is responsible for choosing the best possible way to schedule the existing exercises from the Duolingo dataset such that the knowledge gain of the user is maximised.

In our implementation the reinforcement learning agent is trained using Q-learning. The following are some architectural details of the reinforcement learning agent:

The agent is implemented as a simple linear regression model that is responsible for modelling the Q-value for any given (state, action) pair.

In our case, the state is the knowledge state vector of the user and the action space is the set of exercises that can be presented to the user. During training, the input to the RL agent consists of the vector representing the knowledge state of the user and the embedding of a candidate exercise, which is computed using Sentence-BERT.

We follow an $\epsilon$-greedy policy to train the reinforcement learning agent. In every iteration, the agent selects a random action/exercise with probability $\epsilon$, and with probability $1 - \epsilon$ the agent iterates over the entire set of candidate exercises that are available, computes the Q-value for each of them and then selects the one that has the maximum Q-value.
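The selection rule described above can be sketched as follows. This is a minimal illustration, assuming the linear Q-function acts on the concatenation of the knowledge state vector and the exercise embedding; the function names, the bias term and the toy vectors are hypothetical and stand in for the real Sentence-BERT embeddings.

```python
import random
from typing import List, Sequence


def q_value(weights: Sequence[float], bias: float,
            state: Sequence[float], action: Sequence[float]) -> float:
    """Linear Q(s, a): dot product of the weights with the concatenated [state; action]."""
    features = list(state) + list(action)
    return sum(w * x for w, x in zip(weights, features)) + bias


def select_exercise(weights: Sequence[float], bias: float, state: Sequence[float],
                    candidates: List[Sequence[float]], epsilon: float,
                    rng: random.Random) -> int:
    """Return the index of the chosen candidate under an epsilon-greedy policy."""
    if rng.random() < epsilon:
        return rng.randrange(len(candidates))  # explore: uniformly random exercise
    scores = [q_value(weights, bias, state, c) for c in candidates]
    return max(range(len(candidates)), key=scores.__getitem__)  # exploit: argmax Q


# Toy example: 2-dimensional state, 2-dimensional exercise embeddings.
toy_weights = [0.0, 0.0, 1.0, 0.0]
toy_state = [1.0, 1.0]
toy_candidates = [[0.1, 0.0], [0.9, 0.0], [0.5, 0.0]]
greedy_choice = select_exercise(toy_weights, 0.0, toy_state, toy_candidates,
                                epsilon=0.0, rng=random.Random(0))
```

With `epsilon=0.0` the call is purely greedy and returns the candidate with the highest Q-value; larger values trade this off against exploration.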

Please refer to section 4 for a more detailed explanation of the training procedure used for the reinforcement learning agent.

In the ideal scenario, the Reinforcement Learning algorithm (as mentioned above) would have to be trained on the outputs from human language learners. In the absence of such data, we make use of simulated learners based on Bayesian Knowledge Tracing (BKT) Corbett and Anderson (2005). Bayesian knowledge tracing can be used to model the learning curves for the skills of each user. As per the original proposal of Bayesian Knowledge Tracing, each "skill" is either in the learned state or the unlearned state. The following probabilities can be used to model the growth of a skill for a particular user:

$P(L_0)$: The probability that the skill is in the learned state for the user at time 0.

$P(T)$: The probability that the skill will transition from the unlearned state to the learned state when a chance to apply the given skill is presented to the user.

$P(G)$: The probability that the user will guess the correct answer to an exercise which requires the application of a skill that is currently in the unlearned state for the user.

$P(S)$: The probability that the user will slip and provide the incorrect answer to an exercise which requires the application of a skill that is currently in the learned state for the user.

$P(L_t)$: The probability that the skill is in the learned state for the user at time $t$.

Using the above probabilities, the probability that the user will give the correct answer to an exercise at time $t$ is given as:

$$P(C_t) = P(L_t)\,(1 - P(S)) + (1 - P(L_t))\,P(G)$$

and once the user has provided the answer to an exercise, the probability that the skill will be in the learned state at time $t+1$ will be given as:

$$P(L_{t+1}) = P(L_t \mid r_t) + (1 - P(L_t \mid r_t))\,P(T)$$

where $P(L_t \mid r_t)$ refers to the posterior probability of the skill being in the learned state once we have seen the response ($r_t$) of the user to the exercise at time $t$ and

$$P(L_t \mid r_t = \text{correct}) = \frac{P(L_t)\,(1 - P(S))}{P(L_t)\,(1 - P(S)) + (1 - P(L_t))\,P(G)}$$

$$P(L_t \mid r_t = \text{incorrect}) = \frac{P(L_t)\,P(S)}{P(L_t)\,P(S) + (1 - P(L_t))\,(1 - P(G))}$$
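As a worked illustration, the standard BKT prediction and update for a single skill can be sketched as below. The function and parameter names (`p_learned`, `p_transit`, `p_guess`, `p_slip`) are hypothetical labels for the learned-state, transition, guess and slip probabilities, and the numeric values are illustrative assumptions, not fitted parameters.

```python
def p_correct(p_learned: float, p_guess: float, p_slip: float) -> float:
    """Probability of a correct answer: learned and no slip, or unlearned and a guess."""
    return p_learned * (1.0 - p_slip) + (1.0 - p_learned) * p_guess


def posterior_learned(p_learned: float, p_guess: float, p_slip: float,
                      correct: bool) -> float:
    """Bayes posterior of the learned state after observing one response."""
    if correct:
        numerator = p_learned * (1.0 - p_slip)
        denominator = numerator + (1.0 - p_learned) * p_guess
    else:
        numerator = p_learned * p_slip
        denominator = numerator + (1.0 - p_learned) * (1.0 - p_guess)
    return numerator / denominator


def bkt_update(p_learned: float, p_transit: float, p_guess: float,
               p_slip: float, correct: bool) -> float:
    """Learned-state probability at the next step, allowing for a learning transition."""
    posterior = posterior_learned(p_learned, p_guess, p_slip, correct)
    return posterior + (1.0 - posterior) * p_transit


# Illustrative values only: P(L_t)=0.5, P(T)=0.3, P(G)=0.2, P(S)=0.1.
prob_correct = p_correct(0.5, 0.2, 0.1)
after_correct = bkt_update(0.5, 0.3, 0.2, 0.1, correct=True)
after_incorrect = bkt_update(0.5, 0.3, 0.2, 0.1, correct=False)
```

A correct response raises the learned-state estimate and an incorrect one lowers it, while the transition term ensures that every practice opportunity carries some chance of learning. The sketch does not guard against degenerate parameter choices that make a denominator zero.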

In our case, we make use of a simulated learner to test the policy that is being created by the Reinforcement Learning agent and provide a reward accordingly. For our application, the simulated learner (based on BKT) has been implemented using the following assumptions:

In order to correctly provide the answer to a particular token in an exercise (e.g. provide the correct translation of a particular word), the user needs to have a complete understanding of the token itself along with the corresponding part of speech, morphological features and dependency structure of the token.

Each of the possible values of the tokens, part of speech, morphological features, dependency structure are modelled as a skill in the BKT learner.

Once the user is presented with an exercise, the response of the user to each token in the exercise is first computed based on the present skill state of the user. Once we have generated the answers to each token (which in this case would be whether or not the user provides the correct answer to a particular token), we update the skills associated with each token on the basis of the observed value.
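The assumptions above can be sketched as a small simulated learner. This is only an illustration: the class name, the skill identifiers and all parameter values are hypothetical, and treating a token as correct only when every associated skill independently yields a correct response is one possible reading of the "complete understanding" assumption.

```python
import random
from typing import Dict, List


class SimulatedLearner:
    """BKT-based learner; all parameter values are illustrative assumptions."""

    def __init__(self, p_init: float = 0.1, p_transit: float = 0.2,
                 p_guess: float = 0.2, p_slip: float = 0.1, seed: int = 0) -> None:
        self.p_init, self.p_transit = p_init, p_transit
        self.p_guess, self.p_slip = p_guess, p_slip
        self.p_learned: Dict[str, float] = {}  # skill id -> P(skill is learned)
        self.rng = random.Random(seed)

    def _p_correct(self, skill: str) -> float:
        p = self.p_learned.setdefault(skill, self.p_init)
        return p * (1.0 - self.p_slip) + (1.0 - p) * self.p_guess

    def _update(self, skill: str, correct: bool) -> None:
        p = self.p_learned[skill]
        if correct:
            numerator = p * (1.0 - self.p_slip)
            denominator = numerator + (1.0 - p) * self.p_guess
        else:
            numerator = p * self.p_slip
            denominator = numerator + (1.0 - p) * (1.0 - self.p_guess)
        posterior = numerator / denominator
        self.p_learned[skill] = posterior + (1.0 - posterior) * self.p_transit

    def attempt(self, exercise: List[List[str]]) -> List[bool]:
        """`exercise` holds one list of skill ids (token, POS, ...) per token."""
        responses = []
        for skills in exercise:  # step 1: sample responses from the current state
            p_token = 1.0
            for skill in skills:
                p_token *= self._p_correct(skill)
            responses.append(self.rng.random() < p_token)
        for skills, correct in zip(exercise, responses):  # step 2: update skills
            for skill in skills:
                self._update(skill, correct)
        return responses


learner = SimulatedLearner(seed=1)
toy_exercise = [["tok:gato", "pos:NOUN"], ["tok:come", "pos:VERB"]]
token_results = learner.attempt(toy_exercise)
```

All responses are sampled before any skill is updated, mirroring the two-step order described above.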

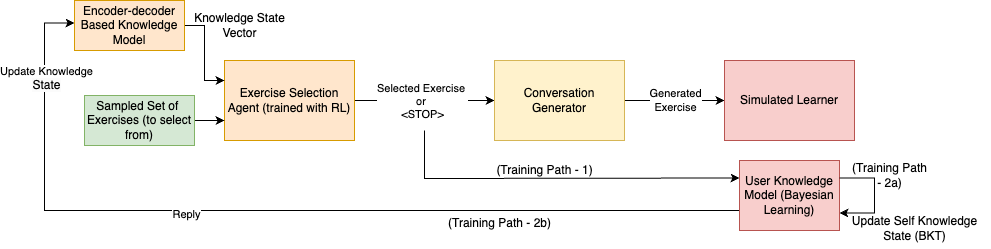

Once we had the core components (i.e. the Reinforcement Learning agent and the knowledge tracing model) in place, we incorporated a conversational element into language learning. At this step, instead of using a shared knowledge state between the simulated learner and the Reinforcement Learning agent, we integrated the encoder-decoder based knowledge tracing model with the Reinforcement Learning based exercise scheduling agent. This allows the interactive agent to build a representation of the current skill set of the user over time and select the appropriate exercises. Once the RL agent has selected a particular exercise, it is fed through a Conversation Generator (a Sequence to Sequence model) that is trained to convert the given simple exercise into a more conversational question. For example, for a user learning English (using Spanish as the base language), if the RL agent indicates that the exercise with the corresponding English translation "I am not perfect" is the best, the conversational agent can generate the sentence "Can you please translate 'Yo no soy perfecto' into English for me?"

The conversation generator used is a Sequence-2-Sequence, pre-trained Google T5 model, that is fine-tuned on the prompts and the corresponding correct answers in the Duolingo dataset.

The overall training approach for the RL agent along with the encoder-decoder based knowledge tracing model will be the same as that mentioned in the previous section. The encoder-decoder based knowledge tracing model will be pre-trained and will be frozen while training the RL agent - it will only be used to generate the knowledge state vectors on the basis of the responses that are provided by the simulated learners.

The knowledge model makes use of the following end-to-end algorithm for training:

For a given user, we consider a randomly sampled sub-sequence of n = 256 exercises and divide it into 2 parts, each with n/2 exercises. The first n/2 exercises (say set I) are used to represent the current history of the user and the rest of them (say set T) represent the exercises for which we want to predict the outcome. For each token in the set I, we extract the following features:

The embeddings for each token (we make use of GloVe embedding)

We project each of the other categorical features, namely the part of speech tag, the dependency label of the token (in the semantic parsing of the exercise) and the label of the token (i.e. whether the user got the token correct or not), into an embedding vector.

All the above mentioned features are concatenated to form an input for the encoder.

The encoder hidden states from all timesteps are condensed into a single vector using max pooling. This vector represents the knowledge state of the user because it has information about the exercises that have been attempted by the user as well as the labels for each token in those exercises (i.e. the information about whether the user got the token correct or not).
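The pooling step can be illustrated with a minimal sketch, assuming the encoder output is available as one hidden vector per time step. The function name and the toy values are hypothetical.

```python
from typing import List


def max_pool_over_time(hidden_states: List[List[float]]) -> List[float]:
    """Keep, for every hidden dimension, the maximum value seen at any time step."""
    if not hidden_states:
        raise ValueError("at least one hidden state is required")
    dim = len(hidden_states[0])
    return [max(step[d] for step in hidden_states) for d in range(dim)]


# Two time steps with three hidden dimensions each.
toy_hidden = [[0.1, -0.5, 0.3],
              [0.4, -0.2, 0.0]]
knowledge_state = max_pool_over_time(toy_hidden)
```

The result has the same size regardless of how long the history is, which is what allows it to be passed to the RL agent as a fixed-length state.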

Now, once we have a condensed representation of the knowledge state of the user, we make use of this in the decoder to predict the performance of the user on a test set of exercises (i.e. the second subset T). The input to the decoder is similar to that of the encoder, except that the value of the 'label' for the exercise is provided as 'unknown' (represented by the number '2' in the input, since the values 0 and 1 represent the labels for correct and incorrect responses respectively). The BiLSTM layers in the decoder create a hidden representation for each token in the test set. This hidden representation at each time-step (i.e. for every token) is concatenated with the knowledge state representation (obtained from the encoder) and is then passed through an MLP network to get the final probability of the user getting the token wrong.
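The data preparation described above (sampling a window of n exercises, splitting it into the history set I and the target set T, and masking the target labels) can be sketched as follows. Representing an exercise as a list of `(token, label)` pairs is an assumption made for illustration, and the function names are hypothetical.

```python
import random
from typing import List, Optional, Tuple

Exercise = List[Tuple[str, int]]  # (token, label) pairs; 0 = correct, 1 = incorrect
UNKNOWN_LABEL = 2  # label value shown to the decoder for the target exercises


def sample_history_and_targets(exercises: List[Exercise], n: int = 256,
                               rng: Optional[random.Random] = None
                               ) -> Tuple[List[Exercise], List[Exercise]]:
    """Sample a contiguous window of n exercises and split it into halves I and T."""
    rng = rng or random.Random()
    if len(exercises) < n:
        raise ValueError("user history is shorter than the window size")
    start = rng.randrange(len(exercises) - n + 1)
    window = exercises[start:start + n]
    return window[:n // 2], window[n // 2:]


def mask_labels(targets: List[Exercise]) -> Tuple[List[Exercise], List[List[int]]]:
    """Return decoder inputs with labels hidden, plus the ground-truth labels."""
    masked = [[(token, UNKNOWN_LABEL) for token, _ in ex] for ex in targets]
    truth = [[label for _, label in ex] for ex in targets]
    return masked, truth


# Toy user history: 300 single-token exercises with alternating labels.
toy_history = [[("w%d" % i, i % 2)] for i in range(300)]
set_i, set_t = sample_history_and_targets(toy_history, n=256, rng=random.Random(0))
decoder_inputs, ground_truth = mask_labels(set_t)
```

The ground-truth labels returned by `mask_labels` are the training targets, while the masked copies are what the decoder actually sees.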

The network is trained using the ground truth labels, for the test exercises, that are available to us in the training data.

During inference, we make use of the history of the user, that is available to us, to create the knowledge state vector (i.e. just make use of the encoder part of the network) since we are only interested in the representation of the knowledge state. (Note: The knowledge state of the user will be updated every time we get a response from the user for an exercise i.e. at every step of the interaction between our agent and the user. This is because the history of interactions of the user will be updated every time we provide a new exercise to the user and the user attempts it).

The training of the RL agent is done as follows:

All the exercises that are present in the training set of the Duolingo dataset were collected and then subdivided into multiple contiguous chunks of alternating teaching and testing data. The user is "taught" using the teaching set and then evaluated using the testing set.

One pair of contiguous teaching and testing data subsets is used by the RL agent in a single episode.

Before the beginning of the episode, the user is tested on the testing subset of the data and the score/accuracy of the user is recorded. Next, the RL agent starts to schedule the exercises from the teaching subset for the user (using the $\epsilon$-greedy policy as mentioned before). At every step, the RL agent can either select one of the exercises from the teaching subset to present to the user or it can terminate the episode.

Once the episode terminates the user is evaluated again on the testing subset and the accuracy will be recorded.

By default the RL agent is provided a reward of "-1" for every exercise that it schedules for the user. Once the episode terminates, the reward for the RL agent is computed as the difference between the final accuracy and the initial accuracy on the test subset of the data. This encourages the RL agent to schedule fewer exercises for the user while still ensuring that the knowledge gain of the user is not compromised.

This is repeated for each pair of teaching and testing subset of exercises in the dataset.

At every step (i.e. every time the RL agent selects an exercise and gets a reward) the linear regression model is updated using the gradient descent update rule for Q-learning.
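The reward scheme and the per-step update in the procedure above can be sketched as follows. This is a minimal illustration of a semi-gradient Q-learning step for a linear model; the learning rate `lr` and discount factor `gamma` are assumptions that are not specified in this section, and the function names are hypothetical.

```python
from typing import List, Sequence


def step_reward(terminated: bool, initial_accuracy: float,
                final_accuracy: float) -> float:
    """-1 per scheduled exercise; accuracy gain on the test subset at termination."""
    if terminated:
        return final_accuracy - initial_accuracy
    return -1.0


def q_learning_update(weights: Sequence[float], features: Sequence[float],
                      reward: float, next_max_q: float, done: bool,
                      lr: float = 0.01, gamma: float = 0.99) -> List[float]:
    """One gradient step on the squared TD error of a linear Q(s, a) = w . x."""
    q = sum(w * x for w, x in zip(weights, features))
    target = reward if done else reward + gamma * next_max_q
    td_error = target - q
    # For a linear model, the gradient of Q with respect to w is the feature vector.
    return [w + lr * td_error * x for w, x in zip(weights, features)]


# Toy step: the agent schedules one exercise and has not yet terminated.
reward = step_reward(terminated=False, initial_accuracy=0.6, final_accuracy=0.6)
new_weights = q_learning_update([0.0, 0.0], [1.0, 2.0], reward,
                                next_max_q=0.0, done=False, lr=0.1)
```

Here `features` stands for the concatenated knowledge state and exercise embedding of the chosen action, and `next_max_q` for the largest Q-value over the candidates available in the next state.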

We selected Google's pre-trained T5 model, which can be imported via HuggingFace, since it provides the most flexibility in its retraining procedure and because of the plethora of documentation available for it. T5 can be trained specifically for a dialogue generation task, which is what we want to do. It is in turn connected to the RL agent, which feeds it the exercises chosen by the agent.

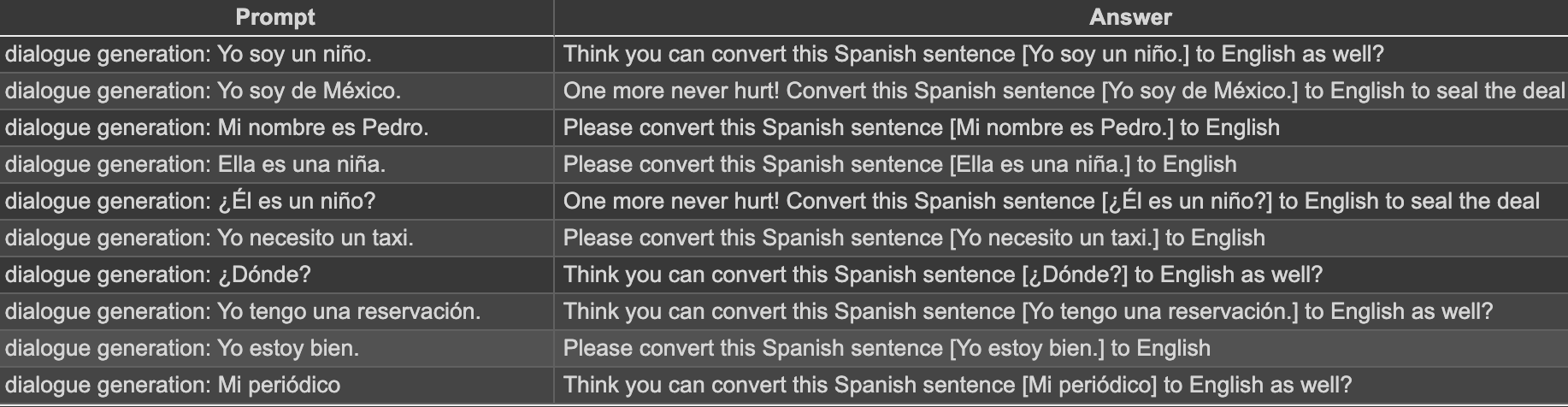

The dataset needs to follow a specific two-column structure: the first column is the prompt and the second is the answer. This allows the model to be customized for a dialogue generation task and also allows our Reinforcement Learning agent to feed in a prompt whenever necessary. In addition to this structure, we need to add a prefix to the prompt so that T5 understands the task. In this case, the prefix we chose was "dialogue generation: ". Figure 4 provides a concrete example of the tuned dataset. After that, the data was simply split into train, validation and test sets.
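A minimal sketch of this formatting step is shown below. The column names `prompt` and `answer`, the helper names, the shuffling and the 80/10/10 split fractions are assumptions made for illustration; only the two-column layout and the "dialogue generation: " prefix come from the description above.

```python
import random
from typing import Dict, List, Sequence, Tuple

PREFIX = "dialogue generation: "


def build_rows(pairs: Sequence[Tuple[str, str]]) -> List[Dict[str, str]]:
    """Turn (prompt, answer) pairs into two-column rows with the task prefix added."""
    return [{"prompt": PREFIX + prompt.strip(), "answer": answer.strip()}
            for prompt, answer in pairs]


def split_rows(rows: Sequence[Dict[str, str]], val_frac: float = 0.1,
               test_frac: float = 0.1, seed: int = 0):
    """Shuffle and split rows into train, validation and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


# Ten toy (prompt, answer) pairs standing in for the Duolingo data.
toy_pairs = [("Yo no soy perfecto %d" % i, "I am not perfect %d" % i) for i in range(10)]
rows = build_rows(toy_pairs)
train_rows, val_rows, test_rows = split_rows(rows)
```

Each row can then be tokenized separately for the input (`prompt`) and target (`answer`) sides.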

With the tuned dataset in place, we require a tokenizer to feed it into the model. For this purpose, HuggingFace's AutoTokenizer was used. Here, the maximum input length and maximum target length, which are essentially limits set on the generated sequences while training, were taken as 1024 and 64 respectively.

While we've already covered the input and output sequence lengths, a few ablations were required to reach the following hyperparameters. The batch size was taken to be 64 and the weight decay 0.01, and since the total dataset was quite large, we trained for only three epochs for the time being.

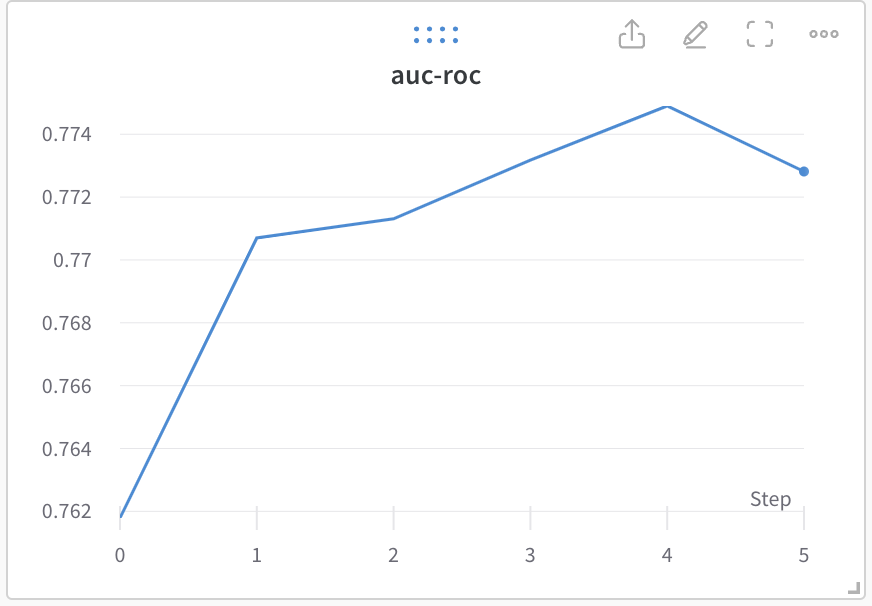

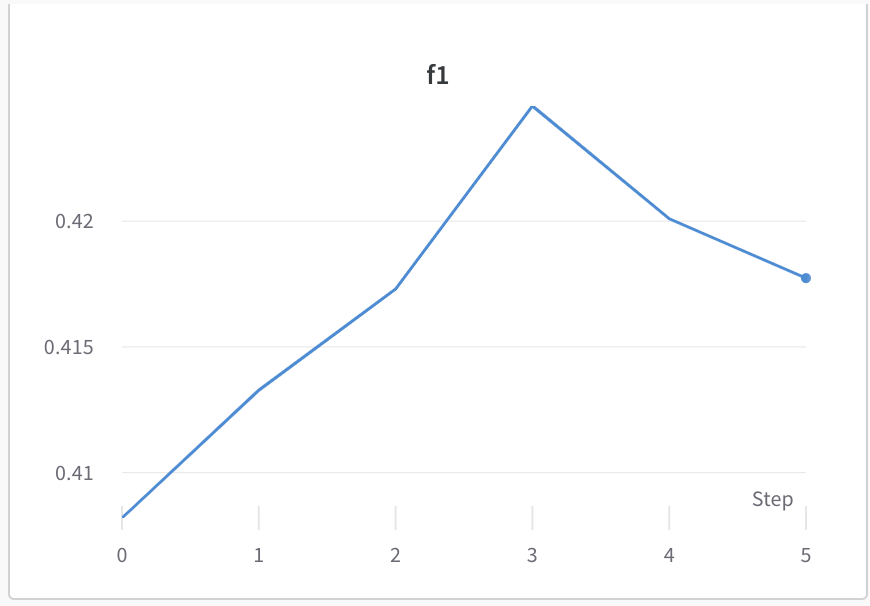

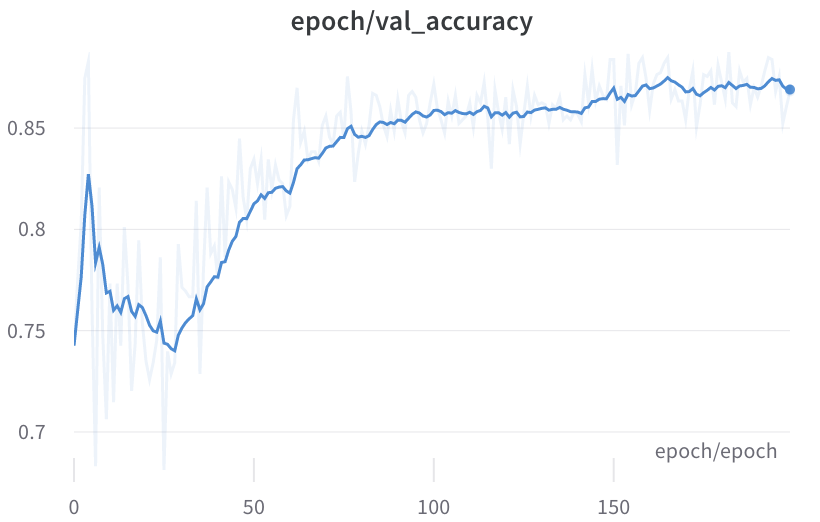

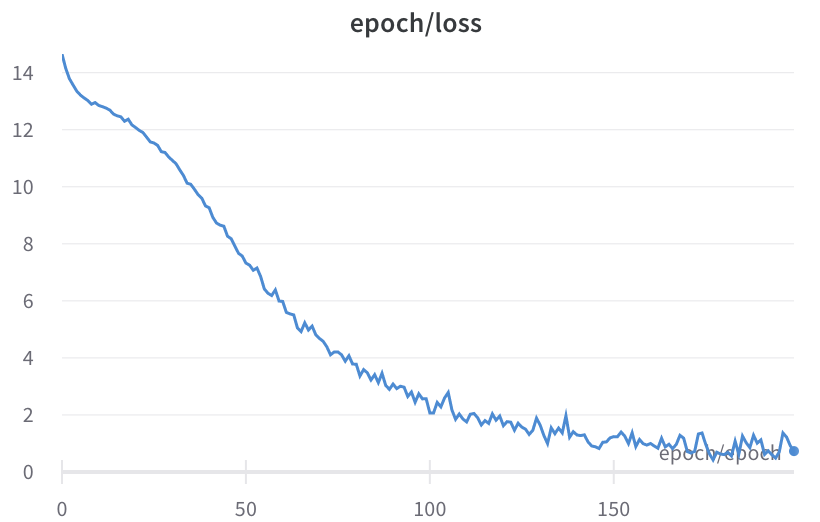

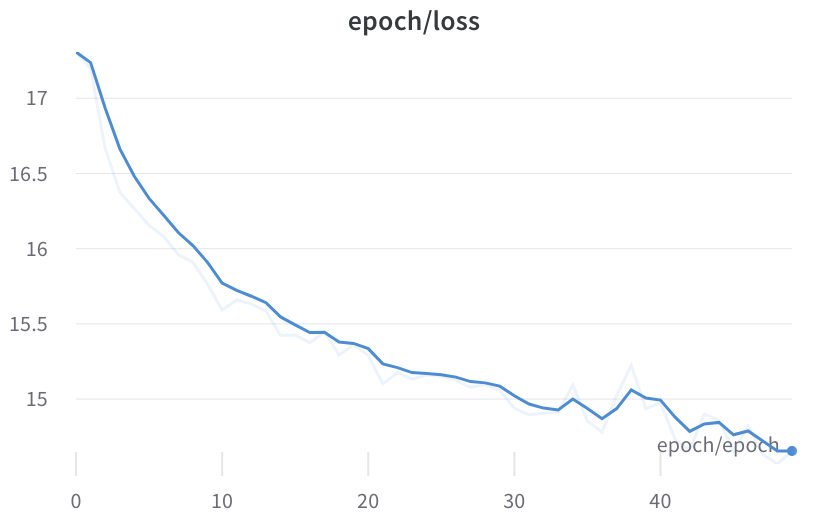

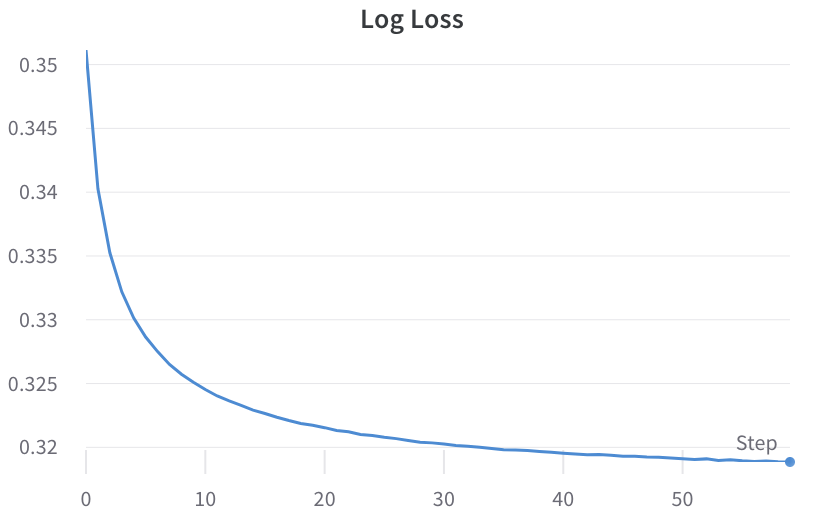

For our knowledge tracing model, we achieve validation scores of 0.4246 (F1 score) and 0.7732 (AUC-ROC) and test scores of 0.4311 (F1 score) and 0.77 (AUC-ROC) on the Duolingo dataset.
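For reference, the two metrics can be computed from token-level predictions as in the sketch below. It assumes that label 1 (token incorrect) is the positive class and that a threshold of 0.5 turns probabilities into hard predictions for F1; both the threshold and the function names are assumptions for illustration, and the toy numbers are not our results.

```python
from typing import List, Sequence


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """F1 for the positive class (label 1 = the user gets the token wrong)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def auc_roc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive is scored above a random negative."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


# Toy predictions for four tokens.
toy_labels = [1, 0, 1, 0]
toy_scores = [0.9, 0.1, 0.4, 0.6]
toy_preds: List[int] = [1 if s >= 0.5 else 0 for s in toy_scores]
toy_f1 = f1_score(toy_labels, toy_preds)
toy_auc = auc_roc(toy_labels, toy_scores)
```

The pairwise AUC computation is quadratic in the number of tokens, so it is suitable for small checks rather than for the full dataset.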


The above-mentioned are results from the best performing model that we implemented. Apart from the implementation of the knowledge model mentioned above, the following are some of the experiments we tried:

Making use of multiple layers of multi-head attention instead of BiLSTM layers: This approach did not work as well as our current model, possibly because of the difficulty in training multiple layers of multi-head attention based transformer blocks from scratch.

Making use of attention to combine the final hidden states from the encoder into a single knowledge state vector (using a weighted average with the attention weights): The results from the attention based approach were similar to the results obtained with max-pooling, but we could not make use of attention in our final model since the computation of the attention requires the query vector from the decoder (i.e. the representation of the token for which we need to compute the prediction). This does not match the requirements of the reinforcement learning agent (where we need a single representation of the knowledge state at any given time).

Making use of sum or mean pooling instead of max pooling to condense the final hidden states of the encoder into a single vector: Max-pooling provided us with the best results, possibly because a lot of the information in the input is contained locally and max-pooling allows us to most effectively capture all the local pieces of information (as compared to mean pooling, which borrows information equally from each time-step).


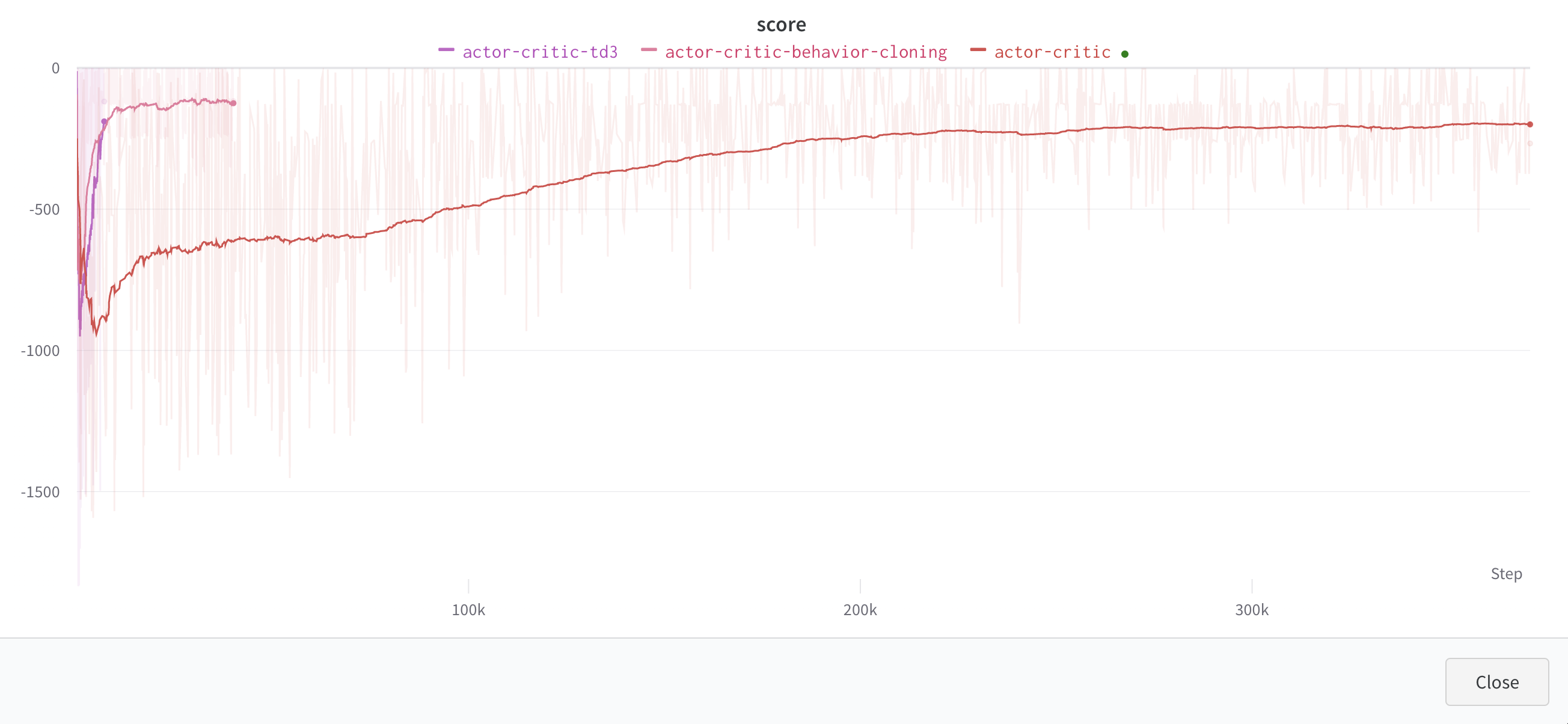

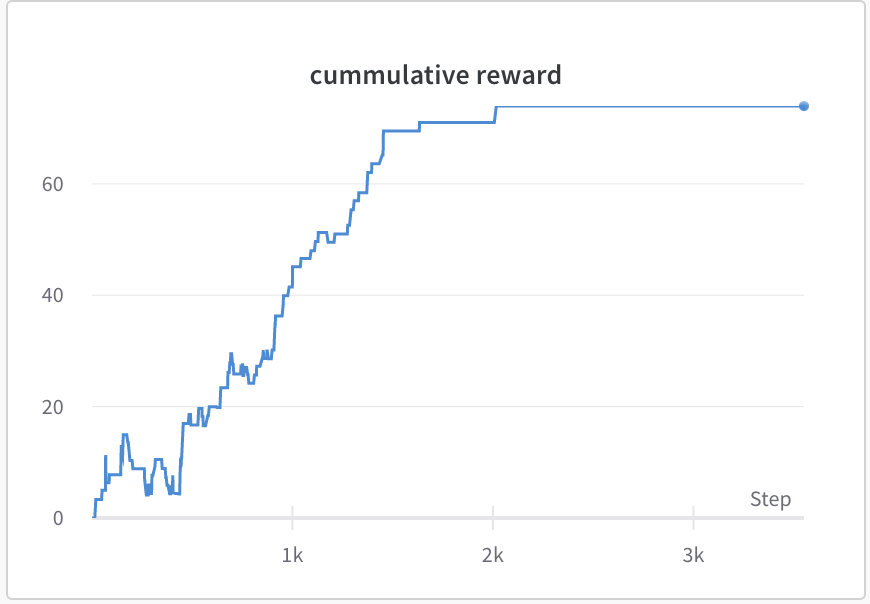

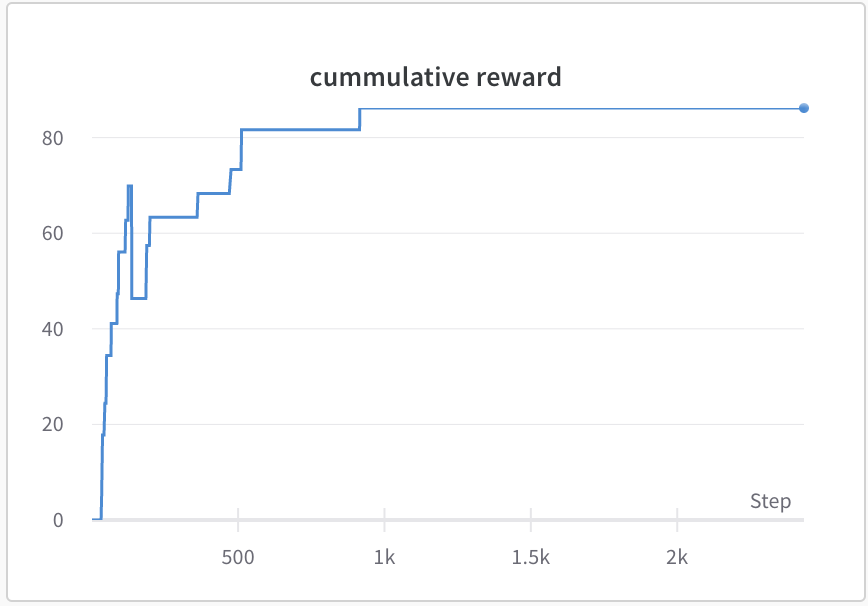

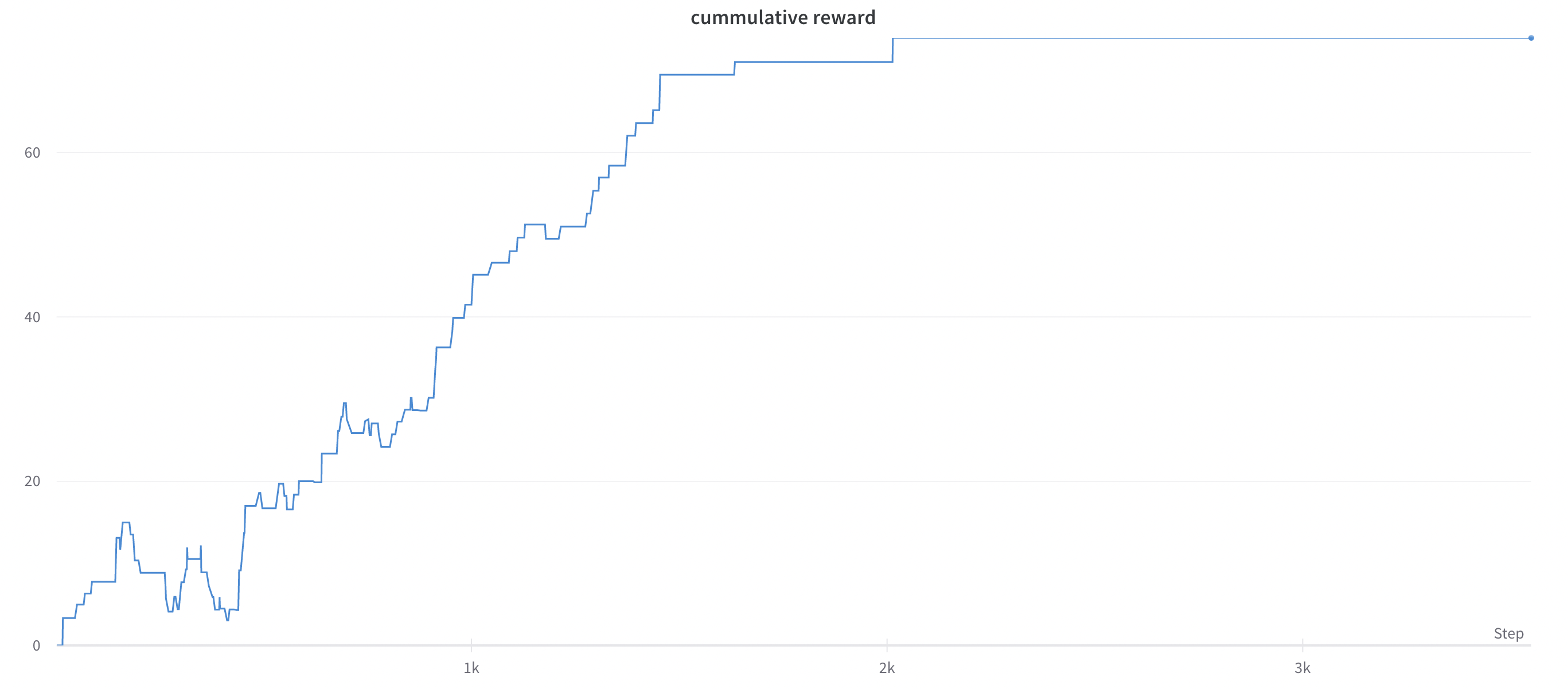

The baseline reinforcement learning agent requires approximately 1500 timesteps to reach saturation.

In contrast, our reinforcement learning module achieves saturation at a greater cumulative reward with merely 300 samples when the knowledge state is provided, and around 500 timesteps when the knowledge state is absent.

By surpassing the baseline approach in both cumulative reward and sample efficiency, our Q-learning based reinforcement learning agent demonstrates superior performance.

Our knowledge tracing model performs slightly below the baseline models. This may be due to the fact that we exclude exercise format and userId features which do not occur in day-to-day conversations, but are included in the baseline models which are specific to Duolingo exercises.

Secondly, in order to input the entire state into our reinforcement learning agent we also compress the user’s knowledge state into one single vector, unlike top-performing baselines which do not use RL agents.

A plausible explanation for the improved performance of our RL agent over the random strategy could be that the agent learns the relationship between the knowledge state and the Sentence-BERT embeddings generated for each conversation. This enables the RL agent to optimise its selection of conversations, focusing on the sentences that best enhance the knowledge state.

Given that Reinforcement Learning performed better than a random policy (saturating at around 300 episodes), we can conclude that it is possible to integrate an RL agent with an online knowledge tracing model to better select exercises and improve user learning. This successful proof of concept for a reinforcement learning agent combined with knowledge tracing provides a foundation for a multitude of future works. This agent could be fine-tuned for better performance and more naturally integrated into a second language acquisition system. Further, we believe that this technique could be used for a variety of tasks beyond second language acquisition. Any online learning method which can track the known and unknown knowledge from the target knowledge task can benefit from this approach.

Our immediate future work goals are to deeply analyze the RL policy that has been learned by our agent. This will help us further tune and engineer our solution, and possibly better structure future datasets for similar tasks. We would also like to explore different reward strategies for the RL agent in order to prioritize different exercise selection strategies (such as making sure that there is a balance between unknown and known concepts in the exercises that are selected by the agent). In addition, generalizing to any broad task with a structured dataset would be challenging. Using a generative model to generalize the RL agent to any task could greatly improve the learning experience that results from this method. A generative model that integrates with a knowledge tracing model and RL agent such as ours would be a great further application of our research.

The approach described in this project is quite novel and hence there wasn’t any particular baseline that emulated what we intended to do on an end to end basis. For the purposes of reaching current performance metrics of the baseline models corresponding to the individual components (i.e. the knowledge tracing model and the RL based conversational agent), we implemented different sections of the proposed solution separately.

Most recently, Graph Neural Networks have been shown to perform better in knowledge tracing [9]; however, our dataset has no graph-oriented elements (at least on a superficial level). In particular, we are not given the skill set required for each exercise, which could otherwise be represented as a graph structure.

That said, we reviewed the winning solutions of the SLAM challenge conducted by Duolingo and saw that the first-place solution was an ensemble of an RNN and a Gradient Boosted Decision Tree, which achieved an AUC score of 0.861 and an F1 score of 0.561. Hence, we chose this approach as our baseline for knowledge modelling and tried implementing various architectures based on it.
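For reference, the two metrics quoted above can be computed as in the following minimal sketch. It uses only the standard library; the function names are ours, and the quadratic pairwise AUC is chosen for readability rather than speed, so it is not the evaluation script used in the challenge.

```python
from typing import Sequence


def f1_score(labels: Sequence[int], preds: Sequence[int]) -> float:
    """F1 of the positive class (label 1) for hard 0/1 predictions."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def auc_roc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive is scored above a random negative
    (ties count as half), which equals the area under the ROC curve."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

AUC is computed from the predicted probabilities, whereas F1 needs hard predictions and therefore depends on the chosen decision threshold.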

The following were the solutions that provided us with the most accurate results:

We implemented an LSTM architecture inspired by the work of [7], who used GloVe [13] to create the embeddings of the tokens (words). To predict the label of a particular token in an exercise, they used the previous 50 tokens from the exercises solved by the user as context. This data was fed into a simple LSTM to predict the label for each token. The ablation details are provided in section 5.
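The context construction described above can be sketched as follows. This is a minimal illustration only: the padding symbol, the function name, and the choice to left-pad short histories are assumptions, and the embedding lookup and the LSTM itself are omitted.

```python
from typing import List, Sequence

CONTEXT_LEN = 50  # number of previous tokens used as context, as described in the text
PAD = "<pad>"     # hypothetical padding symbol for positions with too little history


def context_windows(
    tokens: Sequence[str], context_len: int = CONTEXT_LEN
) -> List[List[str]]:
    """For each position i in the user's token history, return the
    context_len tokens that precede it, left-padded to a fixed length."""
    windows = []
    for i in range(len(tokens)):
        start = max(0, i - context_len)
        history = list(tokens[start:i])
        padding = [PAD] * (context_len - len(history))
        windows.append(padding + history)
    return windows
```

Each window would then be mapped to a sequence of embedding vectors and passed to the LSTM, whose final output is used to predict the label of the token at position i.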

We also found an interesting approach by [10], which made use of the baseline Logistic Regression provided by Duolingo but augmented it with the encoded features of the token preceding the current token (the one to be predicted). It was surprising that taking just one timestep's worth of context, without any loss of information, provided such a significant boost. The results and ablations of this implementation can be seen in section 5.
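The augmentation can be sketched as below, assuming each token is represented by a sparse feature dictionary. The `prev:` prefix, the start marker, and the choice to restart the context at each exercise boundary are our assumptions for illustration, not details taken from [10].

```python
from typing import Dict, List

Features = Dict[str, float]


def add_previous_token_features(exercise: List[Features]) -> List[Features]:
    """Return a copy of each token's features extended with the features of
    the token immediately before it, under a 'prev:' prefix."""
    augmented = []
    for i, feats in enumerate(exercise):
        row = dict(feats)  # copy so the input is left unchanged
        if i == 0:
            # Hypothetical marker for tokens with no predecessor.
            row["prev:<start>"] = 1.0
        else:
            for name, value in exercise[i - 1].items():
                row["prev:" + name] = value
        augmented.append(row)
    return augmented
```

The augmented dictionaries can be fed to the same logistic regression as before; only the feature space grows.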

We propose using a Reinforcement Learning agent that, based on the knowledge tracing results of the user, suggests the next exercises for that particular user, thus making the whole learning experience more personalized. For this work, sample-efficient policy optimization is essential to ensure that the model performs reasonably within a small number of user inputs. In the case of language learning this is even more important, because the user's learned state changes continuously as they interact with new learning modules. Hence, for a given state we will have very few data samples: at most the number of users who interact with the model.

To the best of our knowledge, no prior work uses reinforcement learning for the language learning task on this particular dataset. Bassen et al. [1] use a reinforcement learning scheduler for learning linear algebra.

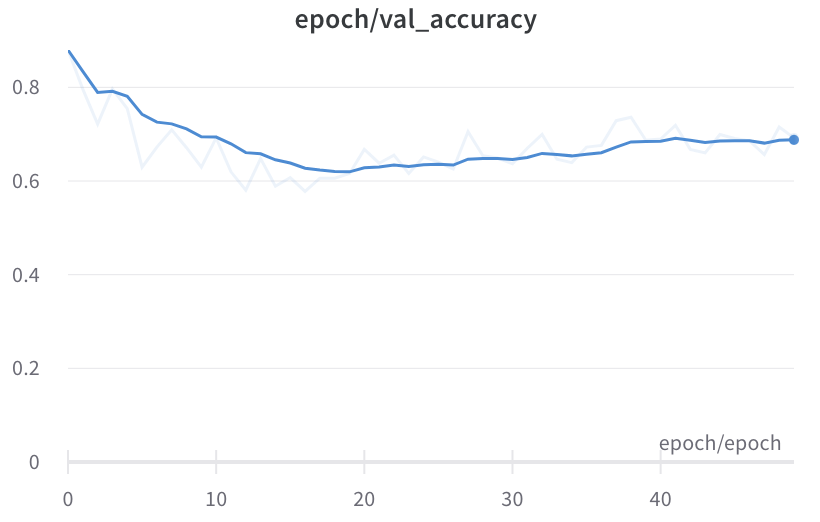

Since there is no existing baseline for RL-based exercise selection, we implemented a random policy agent, which randomly divides all the exercises in the Duolingo dataset into multiple pairs of teaching and testing subsets and then tests that policy against a simulated BKT-based learner. The results can be seen in the next section.
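The random partitioning performed by this baseline can be sketched as follows. This is a minimal illustration under stated assumptions: the function name and the fixed seed are ours, leftover exercises that do not fill a complete pair are dropped, and the subset sizes of 50 and 20 are the ones reported for our BKT experiments.

```python
import random
from typing import List, Sequence, Tuple


def random_teach_test_pairs(
    exercise_ids: Sequence[int],
    teach_size: int = 50,
    test_size: int = 20,
    seed: int = 0,  # assumption: fixed seed for reproducibility
) -> List[Tuple[List[int], List[int]]]:
    """Shuffle the exercises and cut them into disjoint
    (teaching subset, testing subset) pairs."""
    rng = random.Random(seed)
    shuffled = list(exercise_ids)
    rng.shuffle(shuffled)
    step = teach_size + test_size
    pairs = []
    # Exercises that do not fill a complete pair are dropped.
    for start in range(0, len(shuffled) - step + 1, step):
        chunk = shuffled[start:start + step]
        pairs.append((chunk[:teach_size], chunk[teach_size:]))
    return pairs
```

Each teaching subset is played to the simulated learner, and the paired testing subset is then used to measure what the learner has acquired.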

Here are links to a selected subset of the ablations that we ran for this project, each with a short description:

This shows the ablation for LSTM that ran for 200 epochs and provided us with really good insights going forward: https://wandb.ai/idl-s23/DL_4_SLAM-starter_code/runs/d8lu5p8p?workspace=user-vinayn

This shows the ablation for LSTM that ran for 50 epochs, which provided us with the best results by far, especially where the F1 score was concerned: https://wandb.ai/idl-s23/BaseLine%20Ablations/runs/m5l9b71x?workspace=user-vinayn

This shows the ablation for the augmented Logistic Regression Model that ran for 60 epochs. This was the run that gave us the best AUC score: https://wandb.ai/idl-s23/BaseLine%20Ablations/runs/cehsz55d?workspace=user-vinayn

This shows the ablation for the augmented Logistic Regression Model that ran for 30 epochs. Although we ran it with different hyperparameters, the performance was similar to the one with 60 epochs: https://wandb.ai/idl-s23/BaseLine%20Ablations/runs/o6yu27j3?workspace=user-vinayn

This shows the ablation for the BKT (Bayesian Knowledge Tracing) Simulated Learner. Each training subset contained 50 exercises and each testing subset contained 20. The results are computed over all the training subsets played to the user, and the X-axis is the total number of training subsets played to the user: https://wandb.ai/idl-s23/BaseLine%20Ablations/runs/o6yu27j3?workspace=user-vinayn

As mentioned before, we have two major subsections of the proposed solution:


| Model | Epochs | AUC-ROC | F1 Score |
|---|---|---|---|
| LSTM | 40 | 0.751695 | 0.389982 |
| Logistic Regression | 60 | 0.818203 | 0.354804 |
| LGBM | N/A | 0.6127 | 0.35785 |
| LGBM (Additionally Engineered Features) | N/A | 0.539419 | 0.151575 |

To perform the BKT learner test, we divided the dataset into pairs of teaching and testing subsets containing 50 and 20 exercises respectively. From the cumulative curve, we can see that it takes about 2000 teaching batches (data subsets) for the BKT learner to achieve mastery of all the content present in the Duolingo dataset.
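For readers unfamiliar with BKT, the per-concept update that a simulated learner of this kind applies can be sketched as below. The update equations are the standard Bayesian Knowledge Tracing ones; the parameter values and names are illustrative assumptions, not the values used in our experiments.

```python
from dataclasses import dataclass


@dataclass
class BKTParams:
    # Illustrative values only; these are assumptions, not fitted parameters.
    p_init: float = 0.1     # probability the concept is known before any practice
    p_transit: float = 0.2  # probability of learning the concept at each opportunity
    p_slip: float = 0.1     # probability of answering wrong despite knowing
    p_guess: float = 0.2    # probability of answering right without knowing


def p_correct(p_known: float, params: BKTParams) -> float:
    """Probability that the learner answers correctly."""
    return p_known * (1 - params.p_slip) + (1 - p_known) * params.p_guess


def update(p_known: float, correct: bool, params: BKTParams) -> float:
    """Posterior probability that the concept is known after one observed
    response, followed by the learning transition."""
    pc = p_correct(p_known, params)
    if correct:
        posterior = p_known * (1 - params.p_slip) / pc
    else:
        posterior = p_known * params.p_slip / (1 - pc)
    return posterior + (1 - posterior) * params.p_transit
```

A concept is commonly treated as mastered once this probability exceeds a chosen threshold (0.95 is a frequent convention, assumed here rather than taken from our setup), and a cumulative mastery curve counts how many concepts have crossed it after each teaching batch.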